
209. Explaining concurrency vs. parallelism

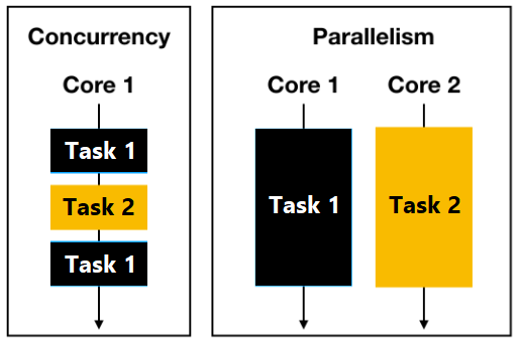

Before tackling the main topic of this chapter, structured concurrency, let’s set structure aside and focus on concurrency alone. Then, let’s contrast concurrency with parallelism, since these two notions are often confused.

Both concurrency and parallelism have tasks as the main unit of work. However, they handle these tasks in very different ways.

In the case of parallelism, a task is split into subtasks that are distributed across multiple CPU cores. These subtasks are computed in parallel, and each of them produces a partial solution for the given task. By joining these partial solutions, we obtain the final solution. Ideally, solving a task in parallel should take less wall-clock time than solving the same task sequentially. In a nutshell, in parallelism, at least two threads are running at the same time, which means that parallelism can solve a single task faster.

In the case of concurrency, we try to solve as many tasks as possible via several threads that compete with each other to make progress in a time-slicing fashion. This means that concurrency can solve multiple tasks faster. This is why concurrency is also referred to as virtual parallelism.

The following figure depicts parallelism vs. concurrency:

Figure 10.1 – Concurrency vs. parallelism
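
To make the distinction more tangible, here is a minimal sketch of both ideas. It is only an illustration under a few assumptions: the class and method names (`ParallelismVsConcurrency`, `parallelSum()`, `runConcurrently()`) are hypothetical, the parallel part relies on a parallel stream (which uses the common fork/join pool and runs in parallel only if several cores are available), and the concurrent part simulates waiting via `Thread.sleep()`:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;
import java.util.stream.LongStream;

class ParallelismVsConcurrency {

    // Parallelism: ONE task (sum 1..n) is split into subtasks,
    // and the partial sums are joined into the final result
    static long parallelSum(long n) {
        return LongStream.rangeClosed(1, n).parallel().sum();
    }

    // Concurrency: MANY independent tasks compete for a few threads
    static List<String> runConcurrently(int taskCount, int threadCount) {
        ExecutorService executor = Executors.newFixedThreadPool(threadCount);
        try {
            List<Callable<String>> tasks = new ArrayList<>();
            for (int i = 0; i < taskCount; i++) {
                final int id = i;
                tasks.add(() -> {
                    Thread.sleep(50); // simulated waiting (for example, I/O)
                    return "task-" + id;
                });
            }

            // invokeAll() returns the futures in the same order as the tasks
            List<String> results = new ArrayList<>();
            for (Future<String> future : executor.invokeAll(tasks)) {
                results.add(future.get());
            }
            return results;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            executor.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(parallelSum(1_000_000)); // 500000500000
        System.out.println(runConcurrently(6, 2));
    }
}
```

In `parallelSum()`, the subtasks exist only because we chose to split the work. In `runConcurrently()`, the six tasks are given, and two threads have to share them.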

In parallelism, tasks (subtasks) are part of the implemented solution/algorithm. We write the code, set/control the number of tasks, and use them in a context that has parallel computational capabilities. On the other hand, in concurrency, tasks are part of the problem.

Typically, we measure the efficiency of parallelism in latency (the amount of time needed to complete the task), while the efficiency of concurrency is measured in throughput (the number of tasks that we can solve).

Moreover, in parallelism, tasks control resource allocation (CPU time, I/O operations, and so on). On the other hand, in concurrency, multiple threads compete with each other to gain as many resources (I/O) as possible. They cannot control resource allocation.

In parallelism, threads operate on CPU cores in such a way that every core is busy. In concurrency, threads operate on tasks in such a way that, ideally, each thread has a task.

Commonly, when parallelism and concurrency are compared, somebody asks: How about asynchronous methods?

It is important to understand that asynchrony is a separate concept. Asynchrony is about the capability to accomplish non-blocking operations. For instance, an application sends an HTTP request, but it doesn’t just wait for the response. It goes and solves something else (other tasks) while waiting for the response. We do asynchronous tasks every day. For instance, we start the washing machine and then go to clean. We don’t just wait by the washing machine until it has finished.
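
The non-blocking idea can be sketched with `CompletableFuture`. This is only an illustration: `AsyncSketch`, `fetchResponse()`, and `doOtherWork()` are hypothetical names, and the slow HTTP call is simulated with a sleep instead of a real network request:

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

class AsyncSketch {

    // Hypothetical stand-in for a slow remote call (for example, an HTTP request)
    static String fetchResponse() {
        try {
            TimeUnit.MILLISECONDS.sleep(200);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return "response";
    }

    // Hypothetical work that doesn't depend on the response
    static String doOtherWork() {
        return "other work done";
    }

    static String run() {
        // Start the slow operation without blocking the caller
        CompletableFuture<String> pending
            = CompletableFuture.supplyAsync(AsyncSketch::fetchResponse);

        // Meanwhile, the caller solves something else
        String other = doOtherWork();

        // Wait for the response only when it is actually needed
        return other + ", then got " + pending.join();
    }

    public static void main(String[] args) {
        System.out.println(run()); // other work done, then got response
    }
}
```

Here, `supplyAsync()` plays the role of starting the washing machine, and `join()` is the moment we come back to collect the laundry.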